At Synack, we have written the first comprehensive playbook on how to leverage agentic AI for penetration testing. The goals are clear. First, to educate the security community on agentic AI fundamentals and best practices in pentesting and vulnerability management. Second, to provide an in-depth overview of how Synack is leveraging agentic AI, including the use cases, the guardrails, and the architecture of our newly launched Synack Autonomous Red Agent, or Sara.

Educating the Security Community on Agentic AI Fundamentals and Best Practices

Agentic AI is capable of strategic reasoning and planning, continuous learning and adaptation, and autonomous tool use. Agents differ from generative AI in that they do not just advise; they execute tasks autonomously.

To better understand the technology, we describe how agents work and interact with large language models (LLMs) to reason, plan and execute specific exploits in the real world. We provide examples, including how an agent would approach a SQL injection (SQLi) or cross-site scripting (XSS) vulnerability.

To ground this in day-to-day use cases, we discuss how the technology can be leveraged across penetration testing, vulnerability triage, threat detection and multi-agent collaboration. Agentic AI systems enhance cybersecurity by performing autonomous penetration testing, validating vulnerabilities during triage, and improving threat detection and investigation. They also enable multi-agent collaboration for complex, multi-phase assessments with minimal human oversight.

Building on an understanding of the technology and the penetration testing use case, we also provide a framework for thinking about the limitations and benefits of traditional penetration testing, PTaaS, and agentic AI-led penetration testing. According to a recent ESG report, 65% of organizations agree that traditional pentesting does not offer a viable or affordable approach to adequately cover their attack surface. While agentic AI does provide benefits such as speed and scale, it lacks the creativity of a human, especially for certain vulnerability classes (e.g., business logic vulnerabilities).

Finally, this ebook offers a list of risks and mitigation strategies to help address concerns related to agentic AI penetration testing such as uncalibrated actions and rate limiting.

An In-Depth Look at Sara, Synack’s Agentic AI Architecture and Approach

The Synack Autonomous Red Agent, called Sara for short, encompasses hundreds of specialized agents that perform different security tasks. Together, they help security teams scale pentesting programs by providing cost-efficient pentesting coverage of their attack surface.

What Is a Specialized AI Agent?

Specialized AI agents are purpose-built for complex, high-skill tasks within dynamic environments. They combine focused models, domain knowledge, and toolchains to form hypotheses, execute multi-step plans, and adapt to failures and outcomes.

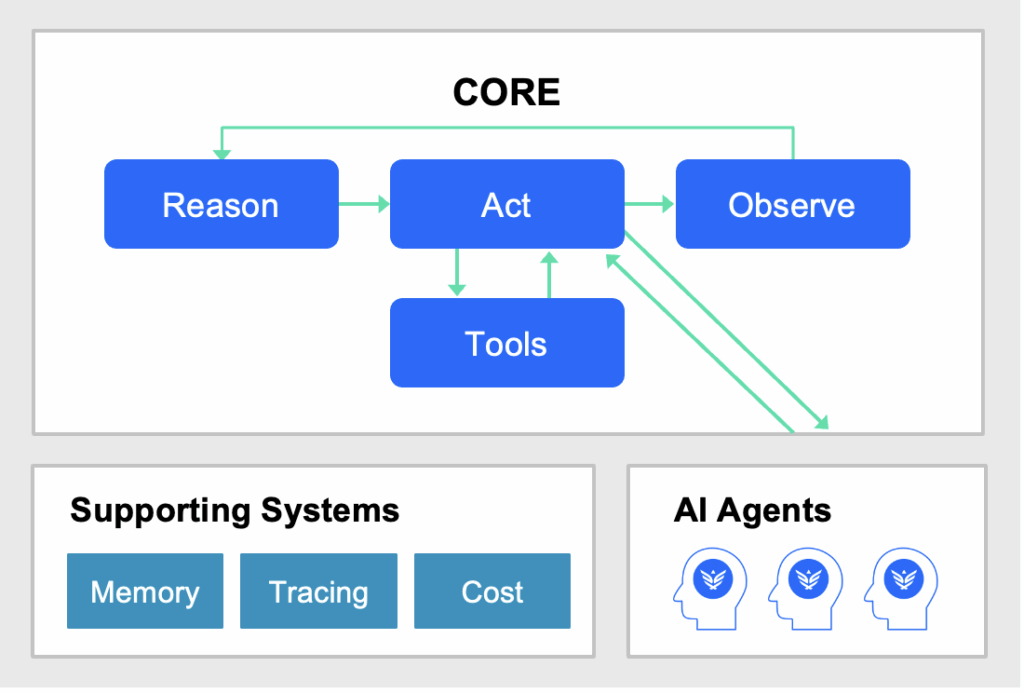

How Synack AI Agents Use the ReAct Pattern

Synack AI agents implement the ReAct (Reasoning and Acting) pattern. ReAct is a prompting and architectural design strategy that enables agents to weave together reasoning, acting, and observing in a loop until they arrive at the right action. With ReAct, each AI agent can reason about security situations, select appropriate tools, and execute actions autonomously. For explainability and traceability, these AI agents can explain their decision process before and after using tools.
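To make the loop concrete, here is a minimal sketch of a reason, act, observe cycle in Python. It is an illustration under stated assumptions, not Synack's implementation: the `reason` function is a rule-based stand-in for an LLM call, and `probe_login` and `report_finding` are hypothetical tools that return canned strings and touch no real system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    """One pass through the loop, kept so the run can be traced afterwards."""
    thought: str
    tool: str
    observation: str


def probe_login(payload: str) -> str:
    # Hypothetical tool: simulates a target that answers with an error page.
    return "HTTP 500: SQL syntax error near '''"


def report_finding(detail: str) -> str:
    # Hypothetical tool: simulates writing a finding to a report.
    return f"finding recorded: {detail}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "probe_login": probe_login,
    "report_finding": report_finding,
}


def reason(last_observation: Optional[str]) -> Tuple[str, Optional[str], str]:
    """Stand-in for the LLM: returns (thought, tool name or None, tool argument)."""
    if last_observation is None:
        return ("Nothing observed yet; probe the login form.", "probe_login", "'")
    if "SQL syntax error" in last_observation:
        return ("The error hints at SQL injection; record it.",
                "report_finding", "possible SQLi on login form")
    return ("Nothing further to try; stop.", None, "")


def run_react(max_steps: int = 5) -> List[Step]:
    trace: List[Step] = []
    observation: Optional[str] = None
    for _ in range(max_steps):                       # hard cap so the loop always ends
        thought, tool, arg = reason(observation)     # Reason
        if tool is None:
            break
        observation = TOOLS[tool](arg)               # Act, then Observe the result
        trace.append(Step(thought, tool, observation))
    return trace
```

Calling `run_react()` returns the list of steps, each pairing the agent's stated thought with the tool it chose and what it observed, which is the kind of record that supports explainability and traceability.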

How Synack AI Agents Work

Reason

The AI agent analyzes the data from its Observe step (e.g., an error message or a successful login) and decides on the next most logical action, guided by its overall goal.

Act

The AI agent executes its decision by calling a tool from its toolset. This is not just running a script. The agent is choosing the right script with the right parameters.

Tools

Tools are the AI agent’s “hands.” They are a library of functions the agent can call, such as port scanners, web crawlers, and custom scripts for specific exploits (e.g., for XSS or SQLi).
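One way to picture a tool library is as a registry of named functions that the agent calls with explicit parameters. The sketch below is an assumption-laden illustration: `TOOLBOX`, `tool`, `call_tool`, `port_scan`, and `crawl` are hypothetical names, and both tools return simulated data rather than generating any network traffic.

```python
import inspect
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to callables.
TOOLBOX: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLBOX[fn.__name__] = fn
    return fn


@tool
def port_scan(host: str, ports: list) -> dict:
    # Simulated result: pretends only ports 80 and 443 are open.
    return {"host": host, "open": [p for p in ports if p in (80, 443)]}


@tool
def crawl(url: str, depth: int = 1) -> list:
    # Simulated result: fabricates one page URL per level of depth.
    return [f"{url}/page{i}" for i in range(depth)]


def call_tool(name: str, **params: Any) -> Any:
    """Look up a tool and check the parameters before running it."""
    if name not in TOOLBOX:
        raise KeyError(f"unknown tool: {name}")
    fn = TOOLBOX[name]
    # Signature.bind raises TypeError on missing or unexpected parameters.
    inspect.signature(fn).bind(**params)
    return fn(**params)
```

Validating parameters before the call reflects the point made under Act: the agent is not just running a script, it has to pick the right one and supply the right arguments, and a bad choice should fail loudly rather than run.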

Using a Team of AI Agents

With Synack, you have a team, not just one AI agent. For example, a Recon Agent first maps the entire attack surface. Then, it might pass its findings to a Web Specialist Agent to hunt for XSS, which in turn could pass a successful breach to a Privilege Escalation Agent to try to gain admin access.
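The hand-off between agents can be sketched as a simple pipeline over a shared context. This is an illustrative assumption about how such a chain might be wired, not a description of Sara's internals; the `Context` and `Finding` types and all three agent functions are hypothetical and only append placeholder findings.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Finding:
    agent: str
    detail: str


@dataclass
class Context:
    """Shared state handed from one agent to the next."""
    target: str
    findings: List[Finding] = field(default_factory=list)


def recon_agent(ctx: Context) -> Context:
    # Placeholder for attack-surface mapping.
    ctx.findings.append(Finding("recon", f"discovered {ctx.target}/search"))
    return ctx


def web_specialist_agent(ctx: Context) -> Context:
    # Works only from what the recon agent handed over.
    recon_findings = [f for f in ctx.findings if f.agent == "recon"]
    for f in recon_findings:
        ctx.findings.append(Finding("web", f"candidate XSS to verify, from: {f.detail}"))
    return ctx


def privilege_escalation_agent(ctx: Context) -> Context:
    # Acts only if the web specialist produced something to build on.
    if any(f.agent == "web" for f in ctx.findings):
        ctx.findings.append(Finding("privesc", "queued admin-access check"))
    return ctx


PIPELINE: List[Callable[[Context], Context]] = [
    recon_agent,
    web_specialist_agent,
    privilege_escalation_agent,
]


def run_team(target: str) -> Context:
    ctx = Context(target)
    for agent in PIPELINE:
        ctx = agent(ctx)
    return ctx
```

A linear list is the simplest possible orchestration; a real multi-agent system would likely route findings conditionally, but the idea of each specialist consuming the previous agent's output is the same.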

Observe

The Observe step is where the AI agent collects the results of the action it just took. This is the agent’s sensory input that allows it to understand the outcome of its test and the state of the target environment. This is not a passive step. It is about actively gathering the raw data, error messages, or successful responses from the tools it executes.
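As a rough illustration of active observation, the sketch below turns a raw tool result into a structured record that the Reason step could consume. The `Observation` type, the `observe` function, and the signal names are hypothetical, and the string checks are deliberately naive stand-ins for whatever parsing a real agent would do.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    status: int   # HTTP status code returned by the tool
    signal: str   # coarse label derived from the raw response
    raw: str      # untouched response body, kept for tracing


def observe(status: int, body: str) -> Observation:
    """Classify a raw response into a signal for the next reasoning step."""
    text = body.lower()
    if status >= 500 and "sql" in text:
        signal = "database_error"
    elif status == 200 and "welcome" in text:
        signal = "login_success"
    else:
        signal = "no_signal"
    return Observation(status, signal, body)
```

Keeping the raw body alongside the derived signal means later steps can re-examine the evidence instead of trusting the label alone.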

Supporting Systems

Leverage Memory and Tracing

The AI agent’s memory prevents it from repeating work or getting stuck in loops. It maintains a record of what actions it has taken, which approaches have failed, and the evolving map of the target system.
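A minimal sketch of such a memory, assuming it only needs to track attempted actions, failed approaches, and notes about the target. The `AgentMemory` class and its method names are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

Action = Tuple[str, str]  # (tool name, argument)


@dataclass
class AgentMemory:
    attempted: Set[Action] = field(default_factory=set)     # every action taken so far
    failed: Set[Action] = field(default_factory=set)        # approaches that did not work
    target_map: Dict[str, str] = field(default_factory=dict)  # evolving notes on the target

    def should_try(self, tool: str, arg: str) -> bool:
        """Return False for actions already taken, which prevents repeats and loops."""
        return (tool, arg) not in self.attempted

    def record(self, tool: str, arg: str, succeeded: bool, note: str = "") -> None:
        key = (tool, arg)
        self.attempted.add(key)
        if not succeeded:
            self.failed.add(key)
        if note:
            self.target_map[arg] = note
```

Before each action the agent would call `should_try`, and after each action `record`, so the same tool is never run twice with the same argument.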

Cost

Task complexity and budget constraints can impact the agents and their capabilities.

Download the eBook

According to a recent survey by Lightspeed Ventures and Fortune, 100% of organizations are allocating budget to AI initiatives, with 86% dedicating 5% or more of their security budget. Understanding agentic AI and its use cases has never been more critical.

Download our eBook to learn more about how to use agents in pentesting.